Page Not Found

Page not found. Your pixels are in another canvas.

A list of all the posts and pages found on the site. For you robots out there, an XML version is available for digesting as well.

Dylan Paiton

This is a page not in the main menu

Published:

Published in IEEE Southwest Symposium on Image Analysis and Interpretation, 2012

Labeling videos with objects using independent color/texture and shape/form processing streams.

Published in The International Joint Conference on Neural Networks (IJCNN), 2013

Computer simulations of distributed sensor networks using a retina-inspired communication protocol to amplify signals.

Published in arXiv Preprint, 2014

Exploring the tradeoff of patch size, stride, and overcompleteness in convolutional sparse coding.

Published in IEEE Southwest Symposium on Image Analysis and Interpretation (SSIAI), 2016

A convolutional sparse coding network facilitates better depth inference than comparable feedforward networks.

Published in Proceedings of the 9th EAI International Conference on Bio-inspired Information and Communications Technologies, 2016

A hierarchical sparse coding network that learns bandpass decompositions of natural images.

Published in Data Compression Conference, 2018

A convolutional autoencoder with divisive normalization enables digital image storage on simulated emerging memristive devices.

Published in Neural Information Processing Systems, 2018

A hierarchical sparse coding model that decomposes scenes into constituent parts and linearizes temporal trajectories of natural videos.

Published in IEEE International Electron Devices Meeting (IEDM), 2018

The first published approach for storing digital natural images onto resistive random access memory arrays.

Published in UC Berkeley Thesis, 2019

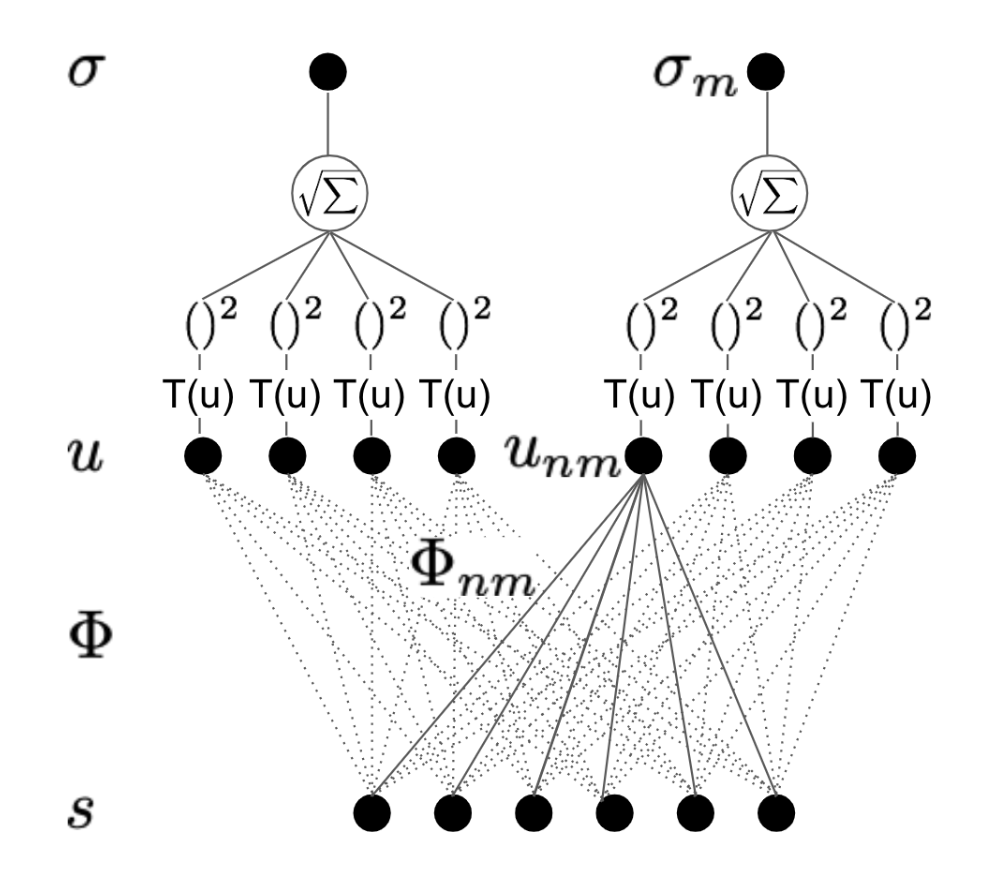

My PhD thesis provides an in-depth account of a recurrent network for sparse inference, including novel analyses, comparisons, and extensions.

Published in Neuro-Inspired Computational Elements, 2020

We present a 2-layer recurrent sparse coding network for learning higher order statistical regularities in natural images.

Published in Journal of Vision, 2020

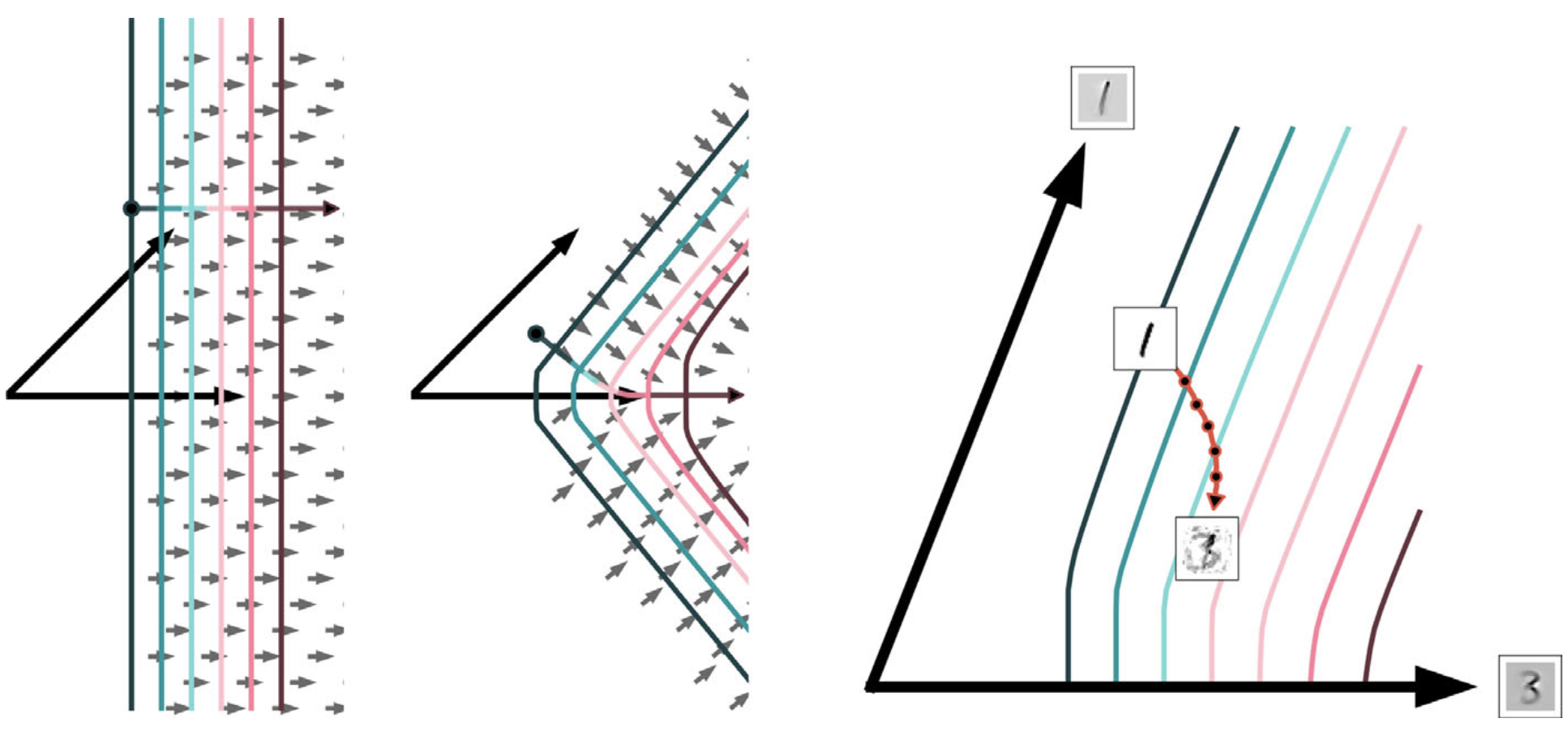

Using differential geometry we explain how sparse coding networks bend their response surfaces, which results in improved selectivity and robustness for individual neurons.

Published in International Conference on Learning Representations, 2021

We construct and analyze new datasets for evaluating disentanglement on natural videos. We also propose a temporally sparse prior for identifying the underlying factors of variation in natural videos.

Published:

Understanding neural computation using differential geometry

Published:

Proposing candidate models for biological scene representation

Published:

We construct an unsupervised learning model that achieves nonlinear disentanglement of underlying factors of variation in naturalistic videos. Previous work suggests that representations can be disentangled if all but a few factors in the environment stay constant at any point in time. As a result, algorithms proposed for this problem have only been tested on carefully constructed datasets with this exact property, leaving it unclear whether they will transfer to natural scenes. Here we provide evidence that objects in segmented natural movies undergo transitions that are typically small in magnitude with occasional large jumps, which is characteristic of a temporally sparse distribution. We leverage this finding and present SlowVAE, a model for unsupervised representation learning that uses a sparse prior on temporally adjacent observations to disentangle generative factors without any assumptions on the number of changing factors. We provide a proof of identifiability and show that the model reliably learns disentangled representations on several established benchmark datasets, often surpassing the current state-of-the-art. We additionally demonstrate transferability towards video datasets with natural dynamics, Natural Sprites and KITTI Masks, which we contribute as benchmarks for guiding disentanglement research towards more natural data domains.

Published:

We introduce subspace locally competitive algorithms (SLCAs), a family of novel network architectures for modeling latent representations of natural signals with group sparse structure. SLCA first-layer neurons are derived from locally competitive algorithms, which produce responses and learn representations that are well matched to both the linear and non-linear properties observed in simple cells in layer 4 of primary visual cortex (area V1). SLCA incorporates a second layer of neurons that produce approximately invariant responses to signal variations that are linear in their corresponding subspaces, such as phase shifts, resembling a hallmark characteristic of complex cells in V1. We provide a practical analysis of training parameter settings, explore the features and invariances learned, and finally compare the model to single-layer sparse coding and to independent subspace analysis.
